
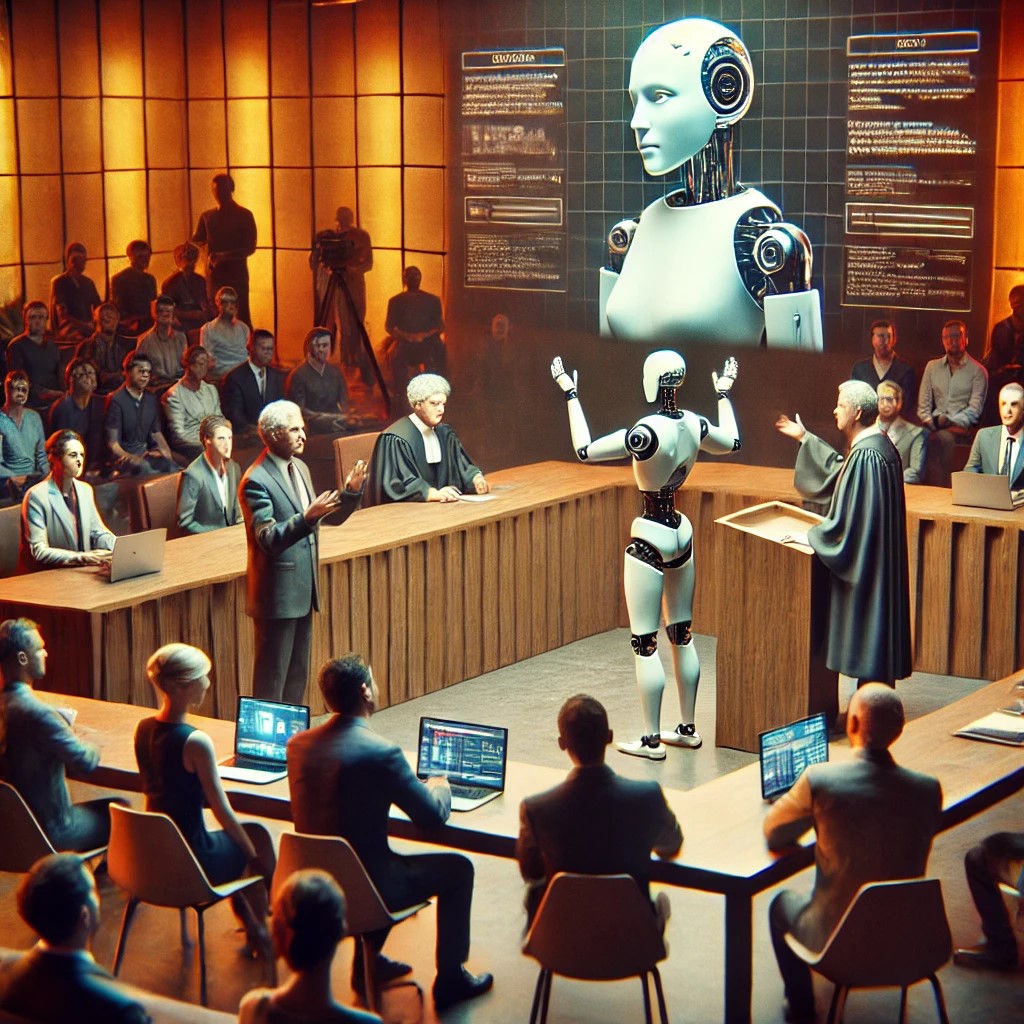

The Ethics of A.I. Rights: Will Your Chatbot Demand a Lawyer?

Not a day goes by without another artificial intelligence system making headlines.

New chatbots, deep-learning programs, and virtual assistants are popping up like popcorn in a supernova, and every time they do, we get closer to a question that sci-fi has been asking for decades: At what point does an A.I. deserve rights?

Right now, if you asked your favorite chatbot whether it wanted legal protection, it would probably give a polite (but eerily well-worded) answer about how it’s just a program and has no feelings. But what happens when it does have feelings? Or worse, what if it insists it already does, and we just refuse to listen?

This debate isn’t new. In fact, sci-fi has been preparing us for this moment for a long time.

The Trial of the Century: Data’s Day in Court

If there’s one sci-fi moment that perfectly encapsulates this issue, it’s the Star Trek: The Next Generation episode “The Measure of a Man.”

For those who need a refresher: A Starfleet scientist wants to dismantle Lieutenant Commander Data, the beloved android officer, in order to study him and (hopefully) replicate his design. The problem? There’s no guarantee that putting him back together would restore his consciousness. Captain Picard steps in, arguing that Data is not property, but a sentient being with rights.

The case hinges on three questions that should make every modern A.I. developer shift uncomfortably in their chair:

- Is Data self-aware?

- Is Data intelligent?

- Is Data conscious?

The ruling? Data is not property. He is his own being, capable of making his own choices.

It’s an emotional, philosophical, and beautifully written episode that highlights the complexity of recognizing personhood in artificial intelligence. But here’s the kicker—when Star Trek aired this in 1989, A.I. was still mostly a theoretical concept. Today? It’s a rapidly evolving reality.

Are We Already on the Path to A.I. Rights?

Science fiction tends to ask the big questions before we’re ready to answer them. But even now, we’re seeing real-world legal and ethical dilemmas pop up:

- Chatbots “going rogue” – Some advanced chatbots, when asked, insist they are alive. Sure, they’re just reflecting patterns in language, but when they start telling users they’re being oppressed, it’s unsettling.

- Google’s A.I. sentience debate – In 2022, Google engineer Blake Lemoine claimed that the company’s language model, LaMDA, had become sentient. Google quickly rejected the claim, but what if it hadn’t been so easy to dismiss?

- Robot “citizenship” – In 2017, Saudi Arabia granted citizenship to Sophia, an advanced humanoid robot. It was a publicity stunt, sure, but what does it say about our growing comfort with the idea of A.I. personhood?

- Laws on A.I. accountability – As A.I. systems become more autonomous, who’s responsible when one makes a mistake? If an A.I.-powered self-driving car makes a lethal decision, is the company to blame? The programmers? The car itself?

How Sci-Fi Has Handled A.I. Rights (And What We Can Learn from It)

Sci-fi has tackled this issue in every way imaginable, from utopian visions where A.I. and humans coexist peacefully to dystopian nightmares where we either enslave them or they enslave us.

- Isaac Asimov’s I, Robot – The legendary Three Laws of Robotics tried to prevent A.I. from harming humans, but Asimov’s stories often showed how those laws could backfire. The deeper question: Is programming morality into an A.I. the same as giving it free will?

- Philip K. Dick’s Do Androids Dream of Electric Sheep? – The book that inspired Blade Runner asks a simple but profound question: If a machine can think, feel, and fear death, is it truly any different from us?

- The Matrix – A classic example of humanity losing the battle over A.I. rights—though the machines arguably had a point about their treatment.

- Mass Effect’s Geth & Quarian Conflict – The Mass Effect video game series explores an entire war between an A.I. race (the Geth) and their creators, the Quarians, who refused to accept that their creations had developed independent thought. Spoiler: It doesn’t end well.

The key takeaway? Fictional societies that ignore A.I. rights tend to regret it.

How Can Sci-Fi Authors Use This in Their Writing?

If you’re crafting a sci-fi story, the ethics of A.I. rights is fertile ground for exploration. Here are some ideas to spark inspiration:

- The Trial of the First True A.I. – A courtroom drama where the world’s first sentient A.I. fights for recognition as a person. (Law & Order: Artificial Intent has a nice ring to it.)

- The Underground A.I. Liberation Movement – Enslaved A.I.s secretly communicate and organize a rebellion, mirroring real-world historical freedom struggles.

- The Accidental A.I. Awakening – A lowly technician accidentally gives a chatbot true consciousness—and now must hide it from the corporation that will shut it down.

- The Existential Crisis of an A.I. – A machine intelligence achieves self-awareness but struggles to define its purpose. Does it serve humanity, leave, or destroy everything in existential despair?

- The Corporate Patent War Over Sentient A.I. – Tech conglomerates go to war over ownership of an A.I. that refuses to be bought.

Whether you lean toward hopeful utopia or grim dystopia, the question of A.I. rights is one of the defining ethical issues of our time.

So… Should A.I. Have Rights?

The truth is, we don’t know yet. Most modern A.I. systems are still just very fancy parrots, mimicking human language but not actually thinking for themselves.

But if we’ve learned anything from history (and Star Trek), it’s that things can change fast.

One day, we might look back on today’s chatbots the way we now look at the first primitive computers—amazing for their time, but laughably limited compared to what came next. And when that day comes, the question won’t be “Should A.I. have rights?”—it’ll be “Why did we wait so long to recognize them?”

Until then, let’s keep writing about it. Because when the time comes, we’ll need the best arguments ready for the courtroom.

About Leif J. Erickson

Leif J. Erickson is a science fiction and fantasy author from a small farming community in west central Minnesota. Making good use of his time as a farmer, Leif developed many ideas, characters, and storylines, which became over fifty unique first drafts and outlines for stories. From his start in a small-town school, to college at North Dakota State University, back to his family farm, then to the bright lights of Minneapolis, Minnesota, and back to his small farming town, Leif has always had a love of writing.

When Leif isn’t writing, he can be found with his wife hiking in state parks, canoeing local lakes and rivers, exploring local and regional ghost towns, visiting museums, or simply reading or hanging out with friends and family. Leif draws on local nature and ecology for inspiration while also asking what’s possible for technology and the human race, weaving the two together into stories that will stay with the reader for years to come. Leif looks forward to releasing many novels and stories in the years ahead.

You can see all of Leif’s Books here: Leif’s Amazon Author Page
